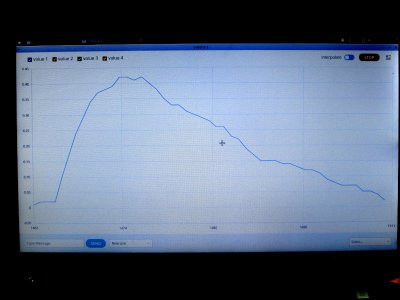

Now things are getting very interesting!

I don't think so, the circular buffer is 512 values deep. At 360 kSPS that's about 1422 µs.

Is the "straight line" part simply that the ring buffer length ran out shortly after the peak? No matter, so long as we get the peak!
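For reference, the time a circular buffer spans is its depth divided by the sample rate: 512 / 360,000 s ≈ 1.42 ms. A minimal Python sketch of such a buffer, assuming one stored value per ADC sample; the `RingBuffer` class and its method names are hypothetical, not taken from Mark's code:

```python
class RingBuffer:
    """Fixed-depth circular buffer: once full, each new sample overwrites the oldest."""

    def __init__(self, depth, sample_rate_hz):
        self.depth = depth
        self.sample_rate_hz = sample_rate_hz
        self._data = [0] * depth
        self._head = 0   # index where the next sample will be written
        self._count = 0  # number of valid samples stored (at most depth)

    def push(self, value):
        self._data[self._head] = value
        self._head = (self._head + 1) % self.depth
        self._count = min(self._count + 1, self.depth)

    def snapshot(self):
        """Return the stored samples in order, oldest first."""
        start = (self._head - self._count) % self.depth
        return [self._data[(start + i) % self.depth] for i in range(self._count)]

    def window_us(self):
        """Time span covered by a full buffer, in microseconds."""
        return self.depth / self.sample_rate_hz * 1e6


buf = RingBuffer(depth=512, sample_rate_hz=360_000)
print(f"{buf.window_us():.0f} us")  # prints "1422 us"
```

Once the buffer is full, `snapshot()` always returns the most recent 512 samples, so anything older than roughly 1.4 ms before the newest sample has already been overwritten.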

No, it doesn't. Something isn't right. It's like some signal is missing, or some samples got rejected.

But that falling edge doesn't look very much like exponential decay, does it.
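One way to check "does that falling edge look like exponential decay?" numerically: an exponential y = A·exp(-t/tau) is a straight line when ln(y) is plotted against t, so a least-squares line fit to the log of the samples gives tau, and R² says how straight that line really is. A sketch, assuming the samples start at the peak and already have the baseline subtracted (the function name `exp_decay_fit` is hypothetical):

```python
import numpy as np


def exp_decay_fit(samples, sample_rate_hz):
    """Fit ln(y) = ln(A) - t/tau to a falling edge; return (tau in seconds, R^2 of the log fit)."""
    y = np.asarray(samples, dtype=float)
    if np.any(y <= 0):
        raise ValueError("samples must be positive (subtract the baseline first)")
    t = np.arange(len(y)) / sample_rate_hz
    log_y = np.log(y)
    slope, intercept = np.polyfit(t, log_y, 1)  # straight-line fit in log space
    predicted = slope * t + intercept
    ss_res = np.sum((log_y - predicted) ** 2)
    ss_tot = np.sum((log_y - log_y.mean()) ** 2)
    return -1.0 / slope, 1.0 - ss_res / ss_tot


# Synthetic check: a clean 50 us decay sampled at 360 kSPS.
t = np.arange(100) / 360_000
tau, r2 = exp_decay_fit(1000 * np.exp(-t / 50e-6), 360_000)
print(f"tau = {tau * 1e6:.1f} us, R^2 = {r2:.6f}")
```

An R² very close to 1 is consistent with an exponential tail; a noticeably lower value, or a fitted tau that shifts depending on how many samples are included, suggests something else is going on, such as missing or rejected samples.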

I found that one too. I may take a closer look at it, but I also just bought a little standalone TFT display from Adafruit that has decent resolution, a reasonable price and only needs an SPI interface. And there's a library available for it.

Don't know if this is useful or not, but it seems to be a Python-based plotter for this sort of use. It should run on Linux, Mac and Windows. I have had to do something similar because the built-in serial plotter is very limited and not well documented. I seem to remember a buffer length of 500 or something weird like that, and I had 1K FFTs I wanted to look at!

GitHub - taunoe/tauno-serial-plotter: Serial Plotter for Arduino and other embedded devices.
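On the 1K FFT point: a 1024-point FFT of real samples yields N/2 + 1 = 513 frequency bins, which already exceeds a plotter window of about 500 points. A small NumPy sketch, assuming a 360 kSPS sample rate and a hypothetical 45 kHz test tone chosen to land exactly on a bin (360,000 / 1024 = 351.5625 Hz per bin, and 45,000 Hz is bin 128):

```python
import numpy as np

N = 1024            # "1K" FFT length
FS = 360_000.0      # sample rate in Hz (assumed, matching the 360 kSPS figure)
F_TONE = 45_000.0   # hypothetical test tone: exactly bin 128 at this FS and N

n = np.arange(N)
x = np.sin(2 * np.pi * F_TONE * n / FS)

spectrum = np.abs(np.fft.rfft(x))      # real-input FFT: N // 2 + 1 bins
freqs = np.fft.rfftfreq(N, d=1 / FS)   # centre frequency of each bin in Hz
peak_bin = int(np.argmax(spectrum))

print(len(spectrum), peak_bin, freqs[peak_bin])
```

Even dropping the DC and Nyquist bins still leaves 511 points, so a ~500-point display would need decimation or a wider window; a plotter with a configurable buffer length avoids that compromise.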

I think it's a bit of misinformation to state that Python is always slow. If you use the numpy and scipy libraries it isn't. It's at least as fast as Matlab. Both those libraries are vectorized, with the heavy lifting done in optimized compiled code (C, C++ and Fortran). So, like Matlab, you can do element-by-element multiplication or matrix math of any sort, including SVD (Singular Value Decomposition) and stuff like that.

While Python code can be persuaded to play nice, it takes some skill to make it so, especially with loops nested inside other loops that have their own conditionals. Avoid it in favour of Java or C++ if possible. I do not say this as an expert in programming: although I have at some stage meddled with most computing languages and platforms, starting with 6502 hex code, through FORTRAN, Pascal, C, C++, etc., and ending up with Python and Java, programming is my weak area. I tended to suck at all of them!
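To illustrate the vectorization point: the same element-by-element product written as an explicit Python loop and as a single NumPy expression, plus a direct SVD call. This is a sketch; the array size is arbitrary and timings vary by machine, but the loop version is typically much slower because every iteration goes through the interpreter, while the NumPy version runs its loop in compiled code.

```python
import time

import numpy as np


def multiply_loop(a, b):
    """Element-by-element product with an explicit Python loop (interpreted per element)."""
    out = [0.0] * len(a)
    for i in range(len(a)):
        out[i] = a[i] * b[i]
    return out


def multiply_vectorized(a, b):
    """Same product as one NumPy expression; the loop runs inside compiled code."""
    return np.asarray(a) * np.asarray(b)


rng = np.random.default_rng(0)
a = rng.standard_normal(200_000)
b = rng.standard_normal(200_000)

t0 = time.perf_counter()
slow = multiply_loop(a, b)
t1 = time.perf_counter()
fast = multiply_vectorized(a, b)
t2 = time.perf_counter()
print(f"loop: {t1 - t0:.4f} s, vectorized: {t2 - t1:.4f} s")

# Matrix routines such as SVD are single calls as well.
m = rng.standard_normal((6, 4))
u, s, vt = np.linalg.svd(m, full_matrices=False)
```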

This was advice from my son, who definitely is a programming professional, with a Masters in math from Exeter. He wrote an entire satellite system data management and tracking control suite that runs 24/7 in dozens of places on the planet. He points out the difficulties with Python, and why it takes deep experience to keep Python code from running slowly and with a variable loop execution time.

On the Raspberry Pi, various Python tools and development environments are useful as a fast way to get a finished application up and running, and to publish code other experimenters can just load up and use. Python can, if done right, be very powerful, but I have had trouble making it so. I have an old A/D converter Pi "hat" add-on that was supposed to control a signal generator chip to make a sweep signal analyzer. The Python code ran so slowly it was simply dysfunctional. Admittedly, the originator should not have used loops within loops the way he did, but it took my son only minutes to write Java code that ran at several MHz!

Python is handy as a controller that calls much faster, better code to handle the critical areas. PyMCA will be OK, because it analyzes and presents the plots as a separate post-processing step, working on files already assembled by the data-gathering code. It is not part of anything time-critical, and it does not steal execution time from the real-time sampling processes in a way that makes the high-priority loop's execution time variable.
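To make the post-processing idea concrete: once the acquisition code has written peak heights to a file, building a pulse-height spectrum offline takes a few NumPy calls and no time away from sampling. This is not PyMCA's own API; the one-value-per-line file format, the 12-bit ADC range (0 to 4095) and the function name `pulse_height_spectrum` are assumptions for illustration:

```python
import io

import numpy as np


def pulse_height_spectrum(lines, n_channels=1024, adc_max=4095):
    """Bin logged peak heights into a pulse-height histogram (offline, not real-time)."""
    peaks = np.loadtxt(lines, dtype=float, ndmin=1)  # one peak height per line (assumed format)
    counts, edges = np.histogram(peaks, bins=n_channels, range=(0, adc_max + 1))
    return counts, edges


# Stand-in for a file written by the acquisition code.
log_file = io.StringIO("100\n102\n101\n2000\n2001\n100\n")
counts, edges = pulse_height_spectrum(log_file, n_channels=16)
print(counts)  # -> [4 0 0 0 0 0 0 2 0 0 0 0 0 0 0 0]
```

In practice `log_file` would be an open file path or handle; the analysis can be rerun with different channel counts without touching the sampler.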

When we are snatching data on a continuous basis, it is important that the timing loop be constant, with a portion that departs to execute little pieces of slow stuff, like a plot display someone is looking at or mouse input someone is doing. We won't notice it, because the loop completes in less than 5 ms. This is not something that interpreted languages like Python or BASIC are good at. That is not to say don't use them: they are great for fast, easy development of the user interface. Just don't let them get in the way of time-critical data-logging code.
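The loop structure described above (critical sampling first, then slow work only in whatever time is left in the period) can be sketched as follows. It is written in Python only to show the shape of the loop; Python itself cannot guarantee this timing, and on the data-gathering side the same structure would normally live in compiled code. The function name, the 1 ms safety margin and the task list are hypothetical:

```python
import time
from collections import deque


def run_fixed_period(n_cycles, period_s, sample, slow_tasks, margin_s=0.001):
    """Call sample() once per period; run queued slow tasks only in leftover time.

    Returns the list of samples and the number of slow tasks still pending.
    """
    samples = []
    pending = deque(slow_tasks)
    deadline = time.perf_counter() + period_s
    for _ in range(n_cycles):
        # Time-critical work always runs first in every cycle.
        samples.append(sample())
        # Slow work (redraws, UI input) runs in small pieces while time remains,
        # keeping margin_s spare so the next cycle is not delayed.
        while pending and time.perf_counter() < deadline - margin_s:
            task = pending.popleft()
            task()
        # Sleep out the rest of the period so cycles start on a fixed schedule.
        remaining = deadline - time.perf_counter()
        if remaining > 0:
            time.sleep(remaining)
        deadline += period_s
    return samples, len(pending)


drawn = []
redraw_tasks = [lambda i=i: drawn.append(i) for i in range(10)]  # stand-ins for slow UI work
stamps, left = run_fixed_period(20, 0.005, time.perf_counter, redraw_tasks)
print(len(stamps), left, drawn)
```

Deadlines advance by a fixed `period_s` rather than from "now", so small delays do not accumulate into drift; a slow task that is too big to fit the leftover time would still need to be split into smaller pieces.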

The approach of letting the sampling and stashing functions interrupt the display plotting code should work just fine.
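A desktop analogue of letting sampling pre-empt the plotting: a producer thread stashes readings in a thread-safe queue, and the display loop drains whatever has arrived between redraws. Threads stand in for hardware interrupts here, which is an assumption; on a microcontroller the producer would be an interrupt handler writing into a ring buffer. `read_adc` is a hypothetical stand-in that just counts up:

```python
import queue
import threading
import time


def sampler(q, stop, period_s, read_adc):
    """Producer: stash one reading per period; never waits on the display."""
    next_t = time.perf_counter()
    while not stop.is_set():
        q.put(read_adc())
        next_t += period_s
        time.sleep(max(0.0, next_t - time.perf_counter()))


def drain_for_display(q):
    """Consumer: take everything queued so far without blocking the producer."""
    batch = []
    while True:
        try:
            batch.append(q.get_nowait())
        except queue.Empty:
            return batch


samples = queue.Queue()
stop = threading.Event()
counter = iter(range(1_000_000))  # hypothetical ADC stand-in: yields 0, 1, 2, ...
producer = threading.Thread(
    target=sampler, args=(samples, stop, 0.001, lambda: next(counter))
)
producer.start()

plotted = []
for _ in range(5):
    time.sleep(0.02)  # pretend this is slow plotting work
    plotted.extend(drain_for_display(samples))
stop.set()
producer.join()
plotted.extend(drain_for_display(samples))  # pick up anything left after stopping
print(len(plotted), plotted[:5])
```

Because the queue preserves order and the final drain runs after `join()`, `plotted` ends up as 0, 1, 2, ... with nothing lost, however slow the drawing step is. An unbounded `queue.Queue` grows if the display falls behind for long; a real logger would bound it or write to disk.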

What Mark is attempting is extremely challenging, and I am in awe of it!

Why is Python so Slow?

Why People Use Python Even If It’s Slow